Article Review: Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology by Mercedes-Benz AG and TU Berlin

2022-03-21

Hypergolic (our ML consulting company) is working on its own ML maturity model and ML assessment framework. In the next phase, I will review three more articles.

Our focus with this work is helping companies at an early to moderately advanced stage of ML adoption, and my comments will reflect this. Please subscribe if you would like to be notified of the rest of the series.

Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology

This article is more about methodology than maturity. I think this is justified at this stage of the topic review. The authors apply the CRISP-DM (Cross-Industry Standard Process for Data Mining) framework [wiki] from data mining to ML and add a quality assurance component.

The authors identify two major problems:

CRISP-DM doesn’t deal with long-term application and deployment.

It doesn’t deal with QA.

These are both critical for productionised ML, so they must be addressed.

In our definition, quality is not only defined by the product’s fitness for its purpose, but the quality of the task executions in any phase during the development of an ML application. This ensures that errors are caught as early as possible to minimize costs in the later stages during the development. The initial effort and cost to perform the quality assurance methodology is expected to outbalance the risk of fixing errors in a later state, that are typically more expensive due to increased project complexity.

2. Related Work

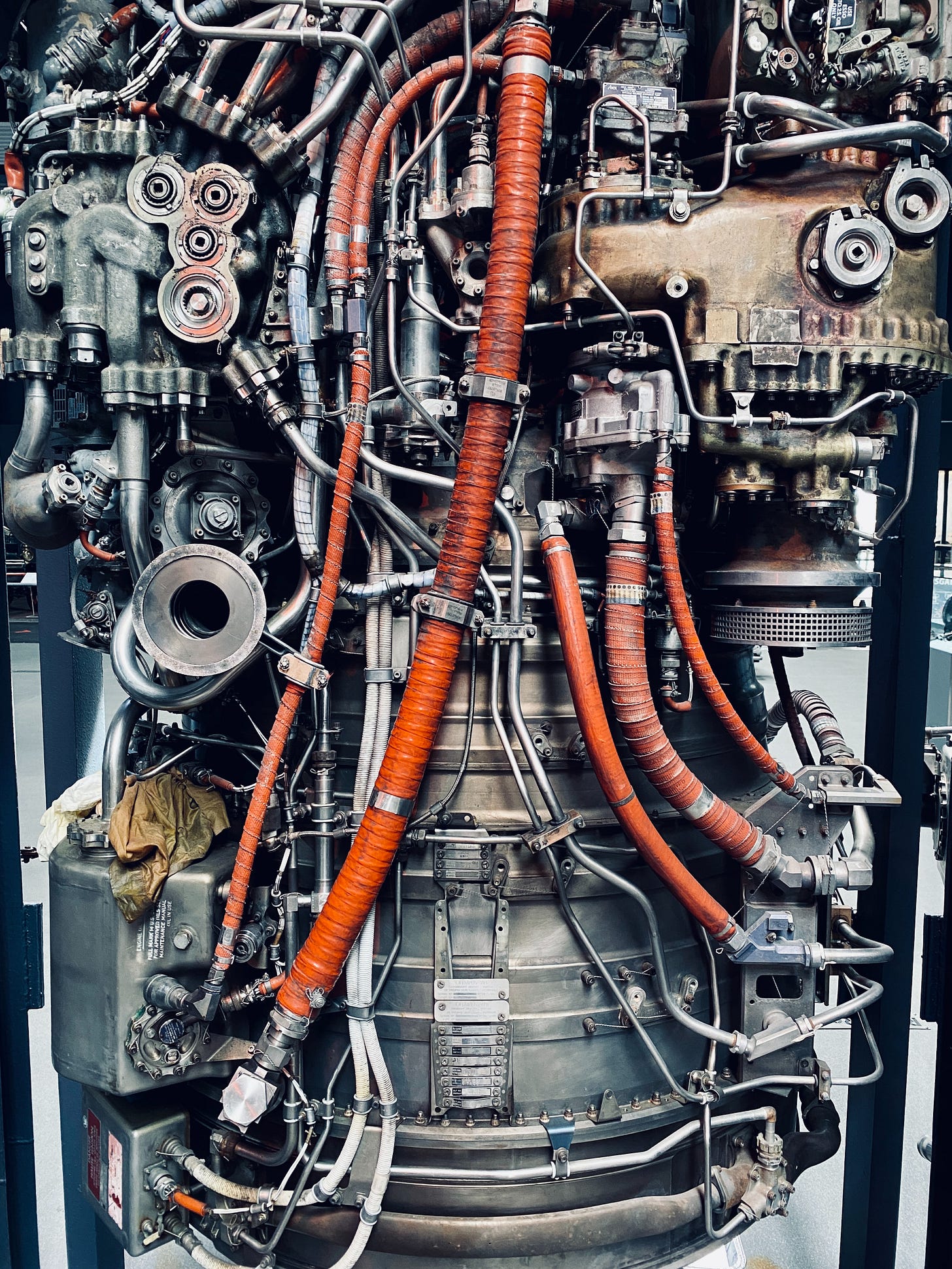

The above diagram succinctly compares the primary differences between DM and ML. ML projects have a more complex offline structure and an extra component in deployment.

3. Quality Assurance in Machine Learning Projects

As a first contribution, quality assurance methodology is introduced in each phase and task of the process model. The quality methodology serves to mitigate risks that affect the success and efficiency of the machine learning application. As a second contribution, CRISP-ML(Q) covers a monitoring and maintenance phase to address risks of model degradation in a changing environment.

This sequence happens for each step of the process:

One major problem with their approach (and CRISP-DM) is the strict sequentiality of steps. This breaks agility because a later stage cannot start before the previous one is finished. This is true even though the process itself is drawn as a circle. In a genuinely agile CI/CD workflow, the steps of the process happen _at the same time_, and the infrastructure needs to support this. There is a temptation to define the project as a one-off iteration and turn it into a waterfall project.

Moreover, business and data understanding are merged into a single phase because industry practice has taught us that these two activities, which are separate in CRISP-DM, are strongly intertwined, since business objectives can be derived or changed based on available data.

This is a very good idea. Usually, having as few steps as possible helps increase iteration speed. This might have been motivated by the slow iteration speed above.

3.1. Business and Data Understanding

3.1.1. Define the Scope of the ML Application

CRISP-DM names the data scientist responsible to define the scope of the project. However, in daily business, the separation of domain experts and data scientists carries the risk, that the application will not satisfy the business needs. Moreover, the availability of training samples will to a large extent influence the feasibility of the data-based application.

This is another excellent initiative. Domain-DS collaboration is crucial to an ML project’s success. One key area of this collaboration is the labelling and analysis of labelled data.

3.1.2. Success Criteria

They identify three levels:

Business Success Criteria: For example, failure rate less than 3%.

ML Success Criteria: A statistical metric

Economic Success Criteria: A KPI metric

In addition, each success criterion has to be defined in alignment to each other and with respect to the overall system requirements to prevent contradictory objectives.

This is again a very good approach. We have already seen merging data and business understanding and making Domain Experts and Data Science work together, enabling the unification of project goals. This makes the project anchored to a common understanding early on that both teams can align to.
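To make the three levels concrete, here is a minimal sketch of how they could be written down and checked together in one place. The 3% failure rate comes from the example above; the `SuccessCriterion` class, the other metric names and all other thresholds are my own hypothetical illustrations, not something the paper prescribes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SuccessCriterion:
    """One measurable target; names and thresholds below are hypothetical."""
    level: str                       # "business", "ml" or "economic"
    metric: str                      # key looked up in the measurements dict
    passes: Callable[[float], bool]  # rule the measured value must satisfy


def evaluate(criteria: List[SuccessCriterion],
             measurements: Dict[str, float]) -> Dict[str, bool]:
    """Return pass/fail per metric; a missing measurement counts as a failure."""
    results = {}
    for criterion in criteria:
        value = measurements.get(criterion.metric)
        results[criterion.metric] = value is not None and criterion.passes(value)
    return results


CRITERIA = [
    SuccessCriterion("business", "failure_rate", lambda v: v < 0.03),
    SuccessCriterion("ml", "recall", lambda v: v >= 0.90),
    SuccessCriterion("economic", "cost_per_unit_eur", lambda v: v <= 1.50),
]

# Made-up measurements from one evaluation run.
report = evaluate(CRITERIA, {"failure_rate": 0.021, "recall": 0.93,
                             "cost_per_unit_eur": 1.80})
go = all(report.values())  # a single go/no-go signal across all three levels
```

Keeping all levels in one structure makes contradictory objectives visible early: in this made-up run the business and ML criteria pass while the economic one fails, so the project as a whole is not yet a "go".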

3.1.3. Feasibility

Data Availability: quality and quantity might be mitigated by adding domain knowledge

It is very hard to make the call on this before a project reaches a later stage.

Applicability of ML technology: Literature search or a POC

Given the freshness of the field, teams frequently need to be the first to attempt the solution.

Legal constraints: Legal constraints are frequently augmented by ethical and social considerations like fairness and trust.

Legal issues must be part of the success criteria and constantly re-evaluated.

Requirements on the application: Robustness, scalability, explainability and resource demand.

These are engineering requirements that will be hard to decide before the project is in a later stage.

It is very hard to evaluate an ML project before it is on the way. Risk-averse feasibility testing might lead to reduced innovation. An ML team’s goal should be to get to an MVP stage as fast (and cheap) as possible and re-evaluate the situation.

This doesn’t mean that fairness and legal issues can be disregarded. Instead, they should be expressed as (business) success criteria, with the go/no-go decision made later.

3.1.4. Data Collection

This section is a bit too short; it only emphasises that collecting data can be challenging and a major source of delay. Data-driven organisations should be proactive and opportunistic with collecting data to reduce the startup time of future ML projects.

They mention data version control, but this is detailed later in reproducibility.

3.1.5. Data Quality Verification

A ML project is doomed to fail if the data quality is poor. The lack of a certain data quality will trigger the previous data collection task.

Codifying when to trigger data collection is an interesting concept, though I am not sure how feasible it is in practice. With cheap storage and compute in general, using as much data as possible is a good idea, but I can understand that labelled data is a scarce resource, and these processes can trigger the collection of more. This can also be done in an agile/CI/CD manner for faster cycle time.

They identify three points in data quality verification.

Data description

Data requirements

Data verification

The bounds of the requirements has to be defined carefully to include all possible real world values but discard non-plausible data. Data that does not satisfy the expected conditions could be treated as anomalies and have to be evaluated manually or excluded automatically.

And this is precisely the problem. Usually, the requirements are either too convoluted to maintain or too schematic to catch enough problems. Anomaly detection in large scale evaluation and production can act as a warning system, and the focus should be on analysing success criteria (including legal and fairness issues).
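As an illustration of the "schematic" end of this trade-off, a bounds-based requirement check can be sketched in a few lines. The field names and bounds are hypothetical; the point is that such checks are cheap to run on every batch and give an anomaly rate to watch, while they will never catch every problem on their own.

```python
from typing import Dict, List, Tuple

# Hypothetical requirements: plausible (min, max) bounds per numeric field.
REQUIREMENTS: Dict[str, Tuple[float, float]] = {
    "temperature_c": (-40.0, 125.0),
    "pressure_bar": (0.0, 10.0),
}


def violations(record: Dict[str, float]) -> List[str]:
    """List the requirement violations of one record (empty list = plausible)."""
    problems = []
    for field, (low, high) in REQUIREMENTS.items():
        if field not in record:
            problems.append(f"{field}: missing")
        elif not low <= record[field] <= high:
            problems.append(f"{field}: {record[field]} outside [{low}, {high}]")
    return problems


def split_records(records):
    """Separate plausible records from anomalies that need manual review."""
    accepted, anomalies = [], []
    for record in records:
        found = violations(record)
        if found:
            anomalies.append((record, found))
        else:
            accepted.append(record)
    return accepted, anomalies


records = [
    {"temperature_c": 21.5, "pressure_bar": 1.0},
    {"temperature_c": 300.0, "pressure_bar": 1.1},  # implausible temperature
    {"temperature_c": 19.0},                        # missing pressure
]
accepted, anomalies = split_records(records)
anomaly_rate = len(anomalies) / len(records)  # a number to track over time
```

Whether anomalies are then reviewed manually or excluded automatically is the policy decision the paper describes; the sketch only separates the two groups.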

3.1.6. Review of Output Documents

This section reviews just the current phase jointly by business and DS before greenlighting the next one. I think this is when success criteria, risks and decisions on the next steps happen. An agile process reviews _all_ phases at once, acting as a single point of control for the entire project.

3.2. Data Preparation

This is a long section mentioning the following topics:

Select Data

Feature selection

Data selection

Unbalanced Classes

Clean Data

Noise reduction

Data imputation

Construct Data

Feature engineering

Data augmentation

Standardise Data

File format

Normalisation (scaling)

The content of this section is fairly standard. On the other hand, it doesn’t have much to say about their relationship to CRISP-ML(Q). Primarily the success criteria should drive this section. My critique is that it is hard to make a call here about continuing without actually doing the modelling step. In practice, one tries to reach a completely evaluated MVP model and assess success and feasibility there.

3.3. Modeling

The choice of modeling techniques depends on the ML and the business objectives, the data and the boundary conditions of the project the ML application is contributing to. […] The goal of the modeling phase is to craft one or multiple models that satisfy the given constraints and requirements.

The section mentions the following aspects of modelling:

Literature research on similar problems:

Wasn’t this supposed to happen at the feasibility stage?

Define quality measures of the model.

They define six relevant aspects: Performance, Robustness, Scalability, Explainability, Complexity, Resource Demand.

These are all good points. I’d separate DS (Performance, Robustness, Explainability) and engineering problems (Scalability, Complexity, Resource Demand) as they allow and require different treatments.

Model Selection

Incorporate domain knowledge

This is an important step in a productionised model aided by the close relationship with domain experts as recommended above. One must still be careful about incorporating false assumptions.

Model training

Using unlabeled data and pre-trained models:

The cost of labelled data is an economic problem and not a technical problem. Only use more complex techniques if it makes business sense.

Model Compression

Ensemble methods

This is a core technique (also mentioned by the Google articles I already reviewed). The technique allows solving subproblems and, through that, incorporating domain knowledge.

3.3.1. Assure reproducibility

Method reproducibility: The article suggests extensive documentation and description. In a modern application, this is replaced by version controlling the entire system and only explaining complex parts in the documentation.

Result reproducibility: The authors refer to numerical instability related to random number generator seeding affecting parameter initialisation and train/evaluation splitting, which is indeed a genuine concern.

Experimental Documentation: This is part experiment tracking and part administration. In the second part, I would focus on answering critical decisions and reasons instead of just writing everything down. Code and evaluation dashboards are a better way to oversee a project.

I would have used modern terminology here (experiment tracking, evaluation stores), but that is a minor concern. The main one is reducing bureaucracy by focusing on code quality and versioning rather than writing down everything again in a hard to refactor documentation format.
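For the result reproducibility point, a minimal sketch of what "reproducibility in code" can look like is to pass the seed explicitly to every source of randomness. This uses only Python's standard `random` module; the function names and the seed value are hypothetical, and a real project would seed its ML framework's generators in the same spirit.

```python
import random
from typing import List, Sequence, Tuple


def train_eval_split(items: Sequence, eval_fraction: float,
                     seed: int) -> Tuple[List, List]:
    """Shuffle with a local, seeded RNG so the split is identical on every run."""
    rng = random.Random(seed)  # local generator; global random state is untouched
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_eval = int(len(shuffled) * eval_fraction)
    return shuffled[n_eval:], shuffled[:n_eval]


def init_weights(n: int, seed: int) -> List[float]:
    """Stand-in for parameter initialisation, driven by an explicit seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 0.1) for _ in range(n)]


SEED = 42  # hypothetical value; version it together with the code and config

train_a, eval_a = train_eval_split(range(100), eval_fraction=0.2, seed=SEED)
train_b, eval_b = train_eval_split(range(100), eval_fraction=0.2, seed=SEED)
same_split = (train_a, eval_a) == (train_b, eval_b)
```

Because the seed is an argument rather than hidden global state, it ends up in version control with the rest of the system, which is the kind of documentation that does not go stale.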

3.4. Evaluation

Validate performance

Determine robustness

Increase explainability for ML practitioners and end-users

Compare results with defined success criteria

This section is pretty standard as well. I would say that explainability is a design feature that needs to be addressed earlier; otherwise, it is questionable how much can be achieved here (this includes more involved techniques like Shapley values).

This is also the point to validate the quantitative aspects of reproducibility from 3.3.1.

3.5. Deployment

Define inference hardware: This should happen at the project start, not after the model is already trained and evaluated.

Model evaluation under production condition: This is the famous train/serve skew. The team is in charge of the training data, if they suspect

Assure user acceptance and usability

Minimise the risks of unforeseen errors: Well, thanks. They refer to rollback/fallback strategies which are a good idea, but they don’t minimise risk; they minimise _losses_.

Deployment strategy: This is A/B/Canary testing; I would tie it with the previous rollback/fallback strategy.

In an agile project, these macro steps are packed more closely together, so they might as well happen at the same time rather than sequentially. This allows smaller subproblems to be solved where they fit best. Don’t wait until you have the model in hand to think about deployment, or until the model is already deployed to recognise train/serve skew.
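Tying the canary deployment to the fallback strategy, as suggested above, can be sketched as a single router. This is my own simplified illustration, not the paper's design: both models are hypothetical stand-ins, and a real system would also log outcomes per variant so the two can be compared.

```python
import random
from typing import Callable, Tuple

Model = Callable[[float], float]


def make_router(stable: Model, candidate: Model, canary_share: float,
                rng: random.Random) -> Callable[[float], Tuple[str, float]]:
    """Send roughly `canary_share` of requests to the candidate, with fallback."""
    def route(x: float) -> Tuple[str, float]:
        if rng.random() < canary_share:
            try:
                return "candidate", candidate(x)
            except Exception:
                # Fallback: the stable model answers, so the loss is contained.
                return "fallback", stable(x)
        return "stable", stable(x)
    return route


def stable_model(x: float) -> float:  # hypothetical current production model
    return 2.0 * x


def candidate_model(x: float) -> float:  # hypothetical new model with a bug
    if x < 0:
        raise ValueError("negative input not supported")
    return 2.1 * x


route = make_router(stable_model, candidate_model, canary_share=0.1,
                    rng=random.Random(0))
served = [route(1.0)[0] for _ in range(1000)]
candidate_share = served.count("candidate") / len(served)
```

The fallback branch does not make the candidate's bug any less likely; it only limits what the bug costs, which is the risk versus loss distinction made earlier.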

3.6. Monitoring and Maintenance

Non-stationary data distribution

Degradation of hardware

System updates

Monitor and Update

After the lengthy discussion in the earlier sections, this one is too short. Change is inevitable in a dynamic environment, and agile iteration is the way to keep the model performing. The best strategy is to compress all previous steps, get to production with an MVP model and iterate there.

The monitoring section also mixes technical (DevOps) and quantitative (DS) errors. I would separate these.

Model monitoring is an ongoing activity where anomaly detection alerts the team of sudden changes and errors. The monitoring is usually mixed with the evaluation part as these are similar and interrelated activities. An ML product is never finished, so the team cannot take their eyes off it.
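One simple way to turn "non-stationary data distribution" into an alert is to compare a live sample of a feature against the training sample. The sketch below uses the Population Stability Index (PSI), which is my choice of technique rather than anything the paper specifies; the data is synthetic, and the 0.2 threshold is a commonly quoted rule of thumb that should be treated as an assumption.

```python
import math
import random
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference and a live sample."""
    low, high = min(reference), max(reference)
    width = (high - low) / bins or 1.0  # guard against a constant reference

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in edge bins.
            index = min(max(int((v - low) / width), 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


rng = random.Random(7)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # synthetic feature
same = [rng.gauss(0.0, 1.0) for _ in range(5000)]      # no drift
shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]   # mean moved by 1 sigma

ALERT_THRESHOLD = 0.2  # rule-of-thumb value, an assumption rather than a standard
alerts = {name: psi(training, sample) > ALERT_THRESHOLD
          for name, sample in [("same", same), ("shifted", shifted)]}
```

The same function can be run offline between training and evaluation data, which is one reason monitoring and evaluation end up so closely intertwined.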

Summary

CRISP-ML(Q) is an update of the CRISP-DM framework to quality-assured ML products. The authors do a great job identifying and filling the gaps to achieve an actionable framework.

Some takeaways:

Close business and DS collaboration is recommended on project definition, success criteria, risks, data acquisition and modelling. This is key for any modern DS team.

Agility over process. This is my main critique of the paper. One would expect more reflection on how to improve a model already in production. The reader might think this is a waterfall process.

Monitoring, anomaly detection, evaluation, train/serve skew are related concepts and must be addressed continuously with a single framework.

Code rather than document-based reproducibility.

End-to-end planning must happen early to enable early productionisation and real-life feedback to update the MVP.

I hope you enjoyed this summary, and please subscribe to be notified about future parts of the series.
