Clean Architecture in Data Science (Part 1)

2022-04-24

Since the rise of MLOps about two years ago, the internet has been littered with various “MLOps stacks”. These are usually dominated by solutions that Big Tech companies built for their own particular problems.

The other trope is VC-backed MLOps SaaS companies gluing their products together to look comparable to one-stop shops like SageMaker or Databricks.

Neither of these is helpful from a Data Scientist's perspective.

What’s the problem?

Most organisations are at the beginning of their ML journey. They might have found some use cases for ML, even created a POC and put it into production with great effort and some ad-hoc engineering.

Their understanding of their own needs, and of what ML can do for them, is still developing. Committing to any particular infrastructure at this stage is premature.

It is important to mention that I am looking at this from the perspective of the Data Scientist and of data products, not from that of MLOps platforms. An MLOps platform is only as useful as its ability to accommodate ML products of enterprise complexity. At an early maturity stage, the products sell the platform and not the other way around.

Products are part of a value chain, while platforms are a capability that needs to be exploited to make economic sense.

Continued investment can only happen if the company doesn’t lose interest in advanced solutions. Despite this, I often see young ML teams investing all their efforts into MLOps platforms before they even have one productionisable idea.

But if you need a platform to productionise an idea and you need a product to justify investing in a platform, isn’t this a chicken-and-egg situation? Yes, it is. And here comes Clean Architecture to the rescue.

What is Clean Architecture?

This concept has many names in software engineering: Hexagonal Architecture, Layered Architecture, Plugin Architecture, etc.

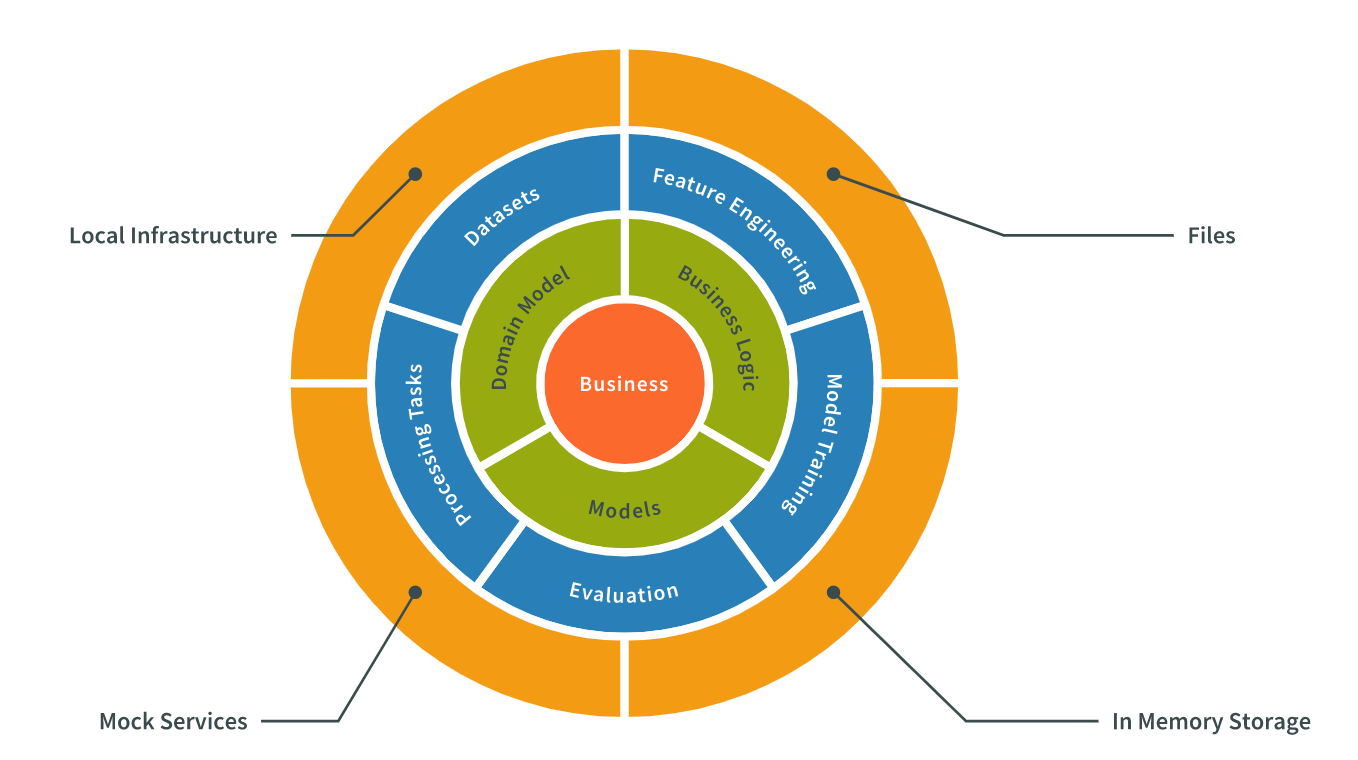

Clean Architecture enables you to separate your solution into different abstraction levels: You will have one place for your business logic, one for managing data, training models, etc. You will also have separate ways to connect to external infrastructure.

Ok, this is pretty obvious, but the question is: How?

Dependency Inversion

We at Hypergolic are huge advocates of applying software engineering techniques to Data Science. While working on our course (the above image is from our training material), we asked ourselves which single technique is the most important one to focus on.

And that’s Dependency Inversion. The concept is very simple: Instead of directly calling a function, you get an adapter, and you call that adapter’s interface. See the example below:
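As a minimal sketch of the idea in Python (the names `Storage`, `InMemoryStorage` and `mean_value` are hypothetical, made up for illustration and not taken from our course material): the business logic receives an adapter and only ever talks to its interface.

```python
from __future__ import annotations

from typing import Protocol


class Storage(Protocol):
    """Interface the business logic depends on (hypothetical name)."""

    def load(self, key: str) -> list[float]: ...


class InMemoryStorage:
    """Adapter backed by a plain dict; a database adapter would expose the same method."""

    def __init__(self, data: dict[str, list[float]]) -> None:
        self._data = data

    def load(self, key: str) -> list[float]:
        return self._data[key]


def mean_value(storage: Storage, key: str) -> float:
    """Business logic: knows only the Storage interface, not where the data lives."""
    values = storage.load(key)
    return sum(values) / len(values)


# The caller decides which adapter to inject.
storage = InMemoryStorage({"prices": [1.0, 2.0, 3.0]})
result = mean_value(storage, "prices")
```

Swapping `InMemoryStorage` for any other object with a matching `load` method changes where the data comes from while `mean_value` stays untouched.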

This is nothing short of a revolutionary idea. Instead of fixing the behaviour of your program, you allow it to be modified as needed without any change to the calling code.

Different behaviours can be constructed to fit the caller’s intentions.

Scaling it up

Applying this at scale leads to the Clean Architecture. In CA, everything is a class. When you write your main program (transform data, train models, the models themselves), you call any dependencies through injected interfaces.

When someone wants to run your program, they instantiate this class. Different behaviours can be constructed as needed without changing the original code by plugging in different adapters. The code can be reused in contexts that do not exist yet by writing adapters to future components.

For example, the following diagram shows using the same code set up in a test context:
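In code, a test context might look roughly like the sketch below. Everything here is an assumption made for illustration: `TrainingPipeline`, `DataSource`, `ModelStore` and the two fake adapters are hypothetical names, and the "model" is a one-parameter least-squares fit chosen only to keep the example short.

```python
from __future__ import annotations

from typing import Protocol


class DataSource(Protocol):
    def read(self) -> list[tuple[float, float]]: ...


class ModelStore(Protocol):
    def save(self, name: str, params: dict[str, float]) -> None: ...


class TrainingPipeline:
    """Main program: fits y = slope * x through the origin by least squares."""

    def __init__(self, source: DataSource, store: ModelStore) -> None:
        self._source = source
        self._store = store

    def run(self) -> None:
        rows = self._source.read()
        slope = sum(x * y for x, y in rows) / sum(x * x for x, _ in rows)
        self._store.save("linear", {"slope": slope})


class FakeDataSource:
    """Test adapter: returns a tiny fixed dataset instead of querying a warehouse."""

    def read(self) -> list[tuple[float, float]]:
        return [(1.0, 2.0), (2.0, 4.0)]


class RecordingModelStore:
    """Test adapter: keeps saved models in memory so a test can inspect them."""

    def __init__(self) -> None:
        self.saved: dict[str, dict[str, float]] = {}

    def save(self, name: str, params: dict[str, float]) -> None:
        self.saved[name] = params


# Test context: same pipeline class, fake adapters plugged in.
store = RecordingModelStore()
TrainingPipeline(FakeDataSource(), store).run()
```

A production context would instantiate the same `TrainingPipeline` with adapters that wrap whatever storage and model registry are actually in place.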

But this can be pushed even further: with Factory and Strategy patterns, you can also create different versions of your business logic and your modelling algorithms as well. Do you want to experiment with a different set of features or different types of models? Just use the Strategy pattern, create a different context and plug in different adapters. All of this while you are still in a generic programming environment rather than a restricted YAML/DSL based situation.
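A rough sketch of how Strategy and Factory could fit together for feature experiments follows. The strategy classes, the `make_feature_strategy` factory and the `age`/`income` columns are all hypothetical and exist only for illustration.

```python
from __future__ import annotations

from typing import Dict, List, Protocol

Row = Dict[str, float]


class FeatureStrategy(Protocol):
    def transform(self, row: Row) -> List[float]: ...


class RawFeatures:
    """Strategy 1: pass the raw columns through."""

    def transform(self, row: Row) -> List[float]:
        return [row["age"], row["income"]]


class RatioFeatures:
    """Strategy 2: a single engineered feature."""

    def transform(self, row: Row) -> List[float]:
        return [row["income"] / row["age"]]


FEATURE_STRATEGIES = {"raw": RawFeatures, "ratio": RatioFeatures}


def make_feature_strategy(name: str) -> FeatureStrategy:
    """Factory: builds a strategy from a name, e.g. one chosen per experiment."""
    return FEATURE_STRATEGIES[name]()


class Experiment:
    """Business logic that does not know which feature set it is running with."""

    def __init__(self, features: FeatureStrategy) -> None:
        self._features = features

    def build_matrix(self, rows: List[Row]) -> List[List[float]]:
        return [self._features.transform(row) for row in rows]


rows = [{"age": 40.0, "income": 80.0}]
raw_matrix = Experiment(make_feature_strategy("raw")).build_matrix(rows)
ratio_matrix = Experiment(make_feature_strategy("ratio")).build_matrix(rows)
```

Adding a third feature set means writing one more class and registering it; `Experiment` itself does not change.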

Main Benefits

Code reuse in different contexts:

The same code will run in test, training, model evaluations and production → No rewrites, no friction if you want to iterate.

Delayed decisions:

The MLOps platform vs ML products chicken-and-egg problem is resolved → You don’t need to decide how your stack will look just yet. Write adapters to whatever you have at the moment and swap them out later.

Reduced dependence:

Making infrastructure decisions before implementing multiple use cases is risky → Using existing infrastructure through adapters enables you to replace it once you have a better idea of your needs and have identified the bottlenecks.

Cleaner structure:

Your product code is generic: you deal with business problems and modelling, and you don’t worry about low-level details. Your abstraction levels are separated, which reduces cognitive load. You don’t need to keep every last detail in your head at all times.

Better integration with other (MLOps/SWE) teams and better internal cooperation:

Because the codebase is functionally separated, maintaining individual components can be handed over to other teams. They can work on their own part without worrying about breaking something else.

Summary

Since working out the details of our approach, we have found Clean Architecture to be an incredibly productive paradigm. It enables us to move very fast in the early stages of the modelling lifecycle with minimal investment, while keeping the code at a quality that can be pushed to production later without costly rewrites.

The uncertainty in any ML product requires you to jump back to earlier phases of the modelling process to apply new insights and push these through the pipeline in a safe way. If you have expensive friction points, this will slow down your iteration loop. Clean Architecture helps you to avoid this.

Lastly, reusing the same code in different contexts enables us to set up cheap and fast tests that we use to refactor the code, further reducing the effort to maintain the codebase.

If you want to know more about our training and services, get in touch at hypergolic.co.uk or LinkedIn.

This was just a brief introduction to Clean Architecture in Data Science. In the following posts of the series, I will be exploring the topic in more detail.