How can a Data Scientist refactor Jupyter notebooks towards production-quality code?


Join our Discord community, “Code Quality for Data Science (CQ4DS)”, to learn more about the topic: https://discord.gg/8uUZNMCad2. All DSes are welcome regardless of skill level!

See also: https://laszlo.substack.com/p/refactoring-the-titanic


The goal of maintaining production-quality code is that you can change your codebase with confidence, knowing it still meets the specifications it already satisfies. This allows you to respond to new requests, attempt new solutions and back out of them if you don’t get them right.

Jeff Bezos distinguishes between Type 1 (high-risk, irreversible) and Type 2 (low-risk, reversible) decisions. A well-maintained codebase turns the hard choices and difficult changes that would mess up your notebook into easy, reversible ones. You no longer need to worry about changing your code, or about being unable to get back to a consistent state.

You can try new features and modify assumptions through targeted modifications that change only the relevant part of the code, while keeping everything else fixed and reusable.

What will you get out of this?

Data science workflows often try to solve an entire task in one go.

Process data, do calculations and do visualisations to understand what’s going on. These are different levels of abstraction with different roles, but they end up coupled in a single notebook. When you need to productionise part of the workflow, it is entangled with the parts that don’t need to be shipped.

The workflow in this article allows you to rapidly clean up your code and separate it into different components. It also gives you a template to use as a starting point next time, and a way to keep your entire project in a consistent state throughout its lifecycle.

Philosophy of refactoring

There are multiple functionally equivalent ways to implement a specification. But not all of them are equally good for non-functional reasons: some are harder to understand, some are harder to change. Good luck trying to implement new requirements in six months. Problems arising from these non-functional reasons are collectively known as “tech debt”. Refactoring is the activity of removing tech debt, paying yourself forward by making your life easier the next time you work on the code.

Refactoring allows you to change the code for non-functional reasons while testing that its functional behaviour didn’t change. During this exercise, the behaviour of your code must not change, and the code should never spend long in a state where it fails your assertions. Choose assertions that verify exactly this. Any time you are lost, roll back with git to a state where the tests pass again. No problem at all.

Prerequisites

I assume you know how to use git for version control and are familiar with the Typer package. I also expect you to be able to set up a Python virtual environment.

Virtual environments are the bread and butter of maintaining a codebase. Knowing that your work depends only on the packages listed in the `requirements.txt` file is part of controlling your work. Dealing with the intricacies of virtual environments is out of scope for this article (I might write about it later). Still, I recommend creating a standardised setup, at least across your team (a standard place for shell scripts, a common directory structure, scripts that generate the virtual environment, etc.). This is part of “infrastructure”, and you should invest in doing it well, exactly once.

At all times during refactoring, you should be able to delete your virtual environment and regenerate it from scratch. This ensures the environment stays up to date with what your code actually needs at runtime.

First Step: Preparations

Some kind of testability is fundamental for successful refactoring.

Unfortunately, most Data Science code is written without tests due to its experimental and statistical nature. Yet some low-effort testability must be the first step of any refactoring effort.

According to Kent Beck (who came to this realisation while working at Facebook), testing can be relaxed if you have an alternative way of quickly validating that your code would still work in production. Testing is a best-effort verification that you didn’t make a breaking mistake in your code, and getting started is more important than being dogmatic.

So now the actual first step:

Save your calculated outputs. Write assertions that load these saved outputs and compare them with your newly calculated outputs.

Find a small set of variables that are very likely to change if you change the code calculating them. Don’t think of anything fancy; just do the bare minimum. You can add more checks later or refactor the asserting code as well. Something like this for a NumPy array:

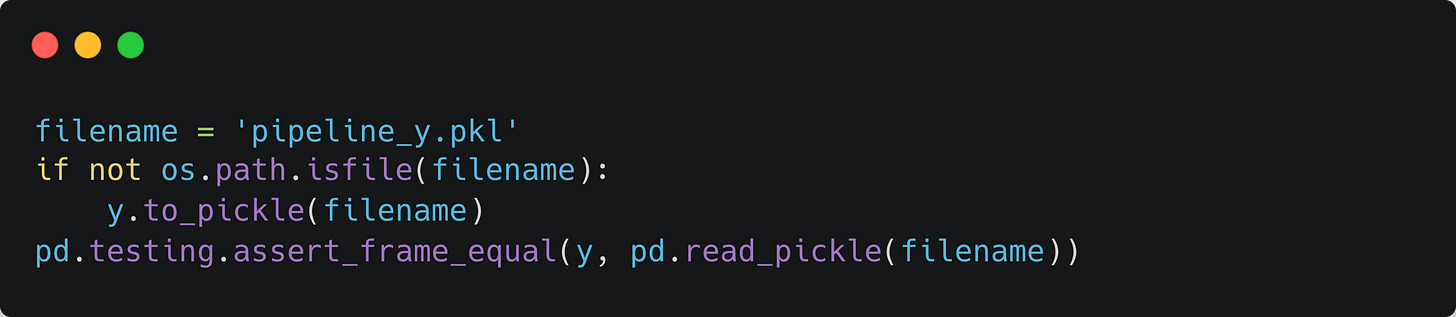
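A minimal sketch of this kind of check, assuming the output array is called `y` and pickled to a file on the first run. The helper name `check_against_saved` and the file name are hypothetical, not taken from the original code:

```python
import os
import pickle

import numpy as np


def check_against_saved(y: np.ndarray, filename: str) -> None:
    """Save `y` on the first run; on later runs, assert it has not changed."""
    if not os.path.exists(filename):
        # First run: store the current output as the reference.
        with open(filename, "wb") as f:
            pickle.dump(y, f)
        return
    with open(filename, "rb") as f:
        y_expected = pickle.load(f)
    # Raises AssertionError if the values differ beyond a small relative tolerance.
    np.testing.assert_allclose(y, y_expected)


# At the end of the notebook (hypothetical variable and file name):
# check_against_saved(y, "y_expected.pkl")
```

`assert_allclose` tolerates tiny floating-point differences; `np.testing.assert_array_equal` would demand exact equality instead.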

or with pandas:
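The same idea for a DataFrame, again as a hypothetical helper. It relies on pandas’ own `DataFrame.to_pickle`, `pd.read_pickle` and `pd.testing.assert_frame_equal`:

```python
import os

import pandas as pd


def check_frame_against_saved(df: pd.DataFrame, filename: str) -> None:
    """Save `df` on the first run; on later runs, assert it has not changed."""
    if not os.path.exists(filename):
        # First run: store the current output as the reference.
        df.to_pickle(filename)
        return
    expected = pd.read_pickle(filename)
    # Compares values, dtypes, index and column labels; raises AssertionError on mismatch.
    pd.testing.assert_frame_equal(df, expected)
```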

The goal is to make it as low-effort as possible while still doing the job. You can always refactor it later (like the multiple uses of filename constants above).

Second Step: Setup

Create a `requirements.txt` and a shell script for creating the virtual environment (if you need an example, try here). Start filling the requirements file. Only add packages that you actually need. If you forget to add something, you will find out anyway when you try to run the code.
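The article uses a shell script for this step. Purely as an illustration, here is an equivalent sketch in Python built on the standard `venv` module; the `.venv` directory name and both function names are assumptions:

```python
import os
import shutil
import subprocess
import venv


def venv_python(env_dir: str) -> str:
    """Path of the interpreter inside a virtual environment."""
    if os.name == "nt":
        return os.path.join(env_dir, "Scripts", "python.exe")
    return os.path.join(env_dir, "bin", "python")


def rebuild_venv(env_dir: str = ".venv", requirements: str = "requirements.txt") -> None:
    """Delete the environment and recreate it from requirements.txt only."""
    if os.path.isdir(env_dir):
        shutil.rmtree(env_dir)  # start from scratch every time
    venv.create(env_dir, with_pip=True)
    # Install exactly what requirements.txt lists, nothing else.
    subprocess.run(
        [venv_python(env_dir), "-m", "pip", "install", "-r", requirements],
        check=True,
    )


if __name__ == "__main__":
    rebuild_venv()
```

Because the environment is always deleted first, a package missing from `requirements.txt` shows up as an import error on the next run instead of silently lingering.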

Create a Python file and set up a Typer script. Typer has a lot of bells and whistles, and I recommend getting familiar with it; see the documentation at https://typer.tiangolo.com/.

Use meaningful names for the files and the `Process` class, although you can change them later. Don’t worry about the parameters yet; you will have time later to refactor them into something nicer. Also, don’t worry about why you have an extra layer of `main` and `Process`; this will become clear in Step Four. Just make sure you are using git and keep committing your code.
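A minimal skeleton might look like the following. The single `param` option is a hypothetical placeholder for whatever parameters your notebook needs; `main` only builds a `Process` and calls its `run()` method, which is the extra layer mentioned above:

```python
import typer

app = typer.Typer()


class Process:
    """Holds the pipeline; the notebook code will move into run()."""

    def __init__(self, param: int):
        self.param = param  # hypothetical placeholder parameter

    def run(self) -> None:
        # Step Three: paste the notebook cells here, in execution order.
        typer.echo(f"Running with param={self.param}")


@app.command()
def main(param: int = 0) -> None:
    # main only wires things together; Process does the actual work.
    process = Process(param)
    process.run()


if __name__ == "__main__":
    app()
```

With a single command, Typer exposes `main` directly, so this would be run as `python your_script.py --param 3`.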

Third Step: Leaving Jupyter

Now, this is the hard part. Hopefully, your notebook is in a sequentially runnable state (that is, you can do a “Kernel->Restart & Run All” on it, which is desirable anyway). If not, make a best effort to copy the code into the `run()` function of the `Process` class above, in the order in which it is intended to run. Move the import statements to the top of the script and add the corresponding packages to `requirements.txt`. Don’t forget to bring over the assertions you wrote in the first step. Whenever you find a missing dependency, rebuild the virtual environment with the script above, and keep running the main script to check that the assertions pass.

Also, make sure you are committing your code regularly. If you are rapidly changing your codebase, frequent commits ensure you can recover if you make a mistake. Remember: turn Type 1 decisions into Type 2 ones!

What to do with visualisation code? The question is whether it affects the assertions passing. If not, leave it out. You can later clean up your notebook by removing all the code you refactored into scripts and keeping only the analysis and visualisation parts.

Side Step: Simple git and code review process

From now on, get used to the following simple git workflow:

1. Start from the latest version of the main branch (git checkout main, git pull).
2. Create a new branch (git checkout -b new_branch).
3. Make your changes, running the main script as you go (more on this later).
4. Add your changes to git and keep committing (git add ..., git commit -m …).
5. When done, create a new pull request.
6. Ask someone to review your code and check that it makes sense.
7. Ask the reviewer to merge it into the main branch.
8. Repeat from the start.

Getting used to someone else looking at your code, and to justifying your choices, is an important step. That person doesn’t need to be a super coder; what matters is that they can understand what your code does. This is a readability check that your intentions are clear from your changes. An external pair of eyes is best for this, because you are too close to the action.

Is it a hassle for someone else? Pay it back by helping them review their code. It is a great opportunity to learn from each other. This also helps homogenise your team’s codebase and converge towards common standards.

Fourth Step: Decouple from external systems

Do you get your data from a database? Directly load a CSV? Or call a REST API? This is called “coupling”: your code, which is supposed to do calculations or data processing, now also deals with fetching the data directly. The data source is hardcoded into the script that processes it, so if you would like the option to get the data from somewhere else (like an experimental source instead of a production database), you can’t do it without modifying the code. This is, BTW, a violation of the O of the SOLID principles, the Open-Closed Principle (OCP). The actual OCP has a far wider meaning, but this is a simple first example of it.

What’s the solution then? Getting the data is a decision, and, as we know, “Wrap your hard decisions with a class”. And that’s exactly what we are going to do.

Getting the data and the source of this data are separate issues. If your pipeline needs a dictionary to work with, does your code care if the dictionary came from a database or a CSV? Unlikely.

This is what is called separation of concerns. We will ensure that the information on how to get the data from a source (e.g. the connection string of a DB or the credentials for a REST API) is collected into one class, and that this class returns the data we need and nothing else. Instead of:
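To make this concrete, assume (hypothetically) that the names live in a SQLite table `people` with a `name` column, and that the processing step just normalises and sorts them. The coupled version reads the database directly inside the processing code:

```python
import sqlite3


def process(db_path: str) -> list[str]:
    # Data access is hardcoded into the processing step: reading the names
    # from a CSV instead would mean editing this function.
    conn = sqlite3.connect(db_path)
    try:
        names = [row[0] for row in conn.execute("SELECT name FROM people")]
    finally:
        conn.close()
    # The actual processing (hypothetical): normalise and sort the names.
    return sorted(name.strip().title() for name in names)
```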

Try something like:
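Continuing the same hypothetical SQLite example, all the database details move into `SQLNameLoader`, and its `get_names()` method returns only the list of names:

```python
import sqlite3


class SQLNameLoader:
    """Everything about the database lives in this one class."""

    def __init__(self, db_path: str):
        self.db_path = db_path

    def get_names(self) -> list[str]:
        conn = sqlite3.connect(self.db_path)
        try:
            return [row[0] for row in conn.execute("SELECT name FROM people")]
        finally:
            conn.close()


def process(db_path: str) -> list[str]:
    # The only line that knows about the database, but it still names SQLNameLoader.
    names = SQLNameLoader(db_path).get_names()
    return sorted(name.strip().title() for name in names)
```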

This is better but not finished. All the database-related details are in that single class, and the place where you use the names is just one line. But what if you suddenly want to load those names from a file? Your database is still hardcoded into your processing script.

This is the concept of dependency inversion (the D of the SOLID principles, also known as DIP). If `SQLNameLoader` were a variable instead of a piece of code, its value could be changed at runtime. You don’t need to decide its value until the code actually runs (or at least not now, while you are writing the code).

But how do you do that? Easy: replace the class with a variable! Do you remember the extra layer of `main` and the `Process` class? This was the reason. Let’s see it in practice:
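A sketch of the injected version, still using the hypothetical SQLite table, plus a hypothetical `CSVNameLoader` to show the swap. `NameLoader` is a `typing.Protocol` that only documents the `get_names()` interface; `main` is a plain function here, whereas in the Typer script it would be the `@app.command()`:

```python
import csv
import sqlite3
from typing import Protocol


class NameLoader(Protocol):
    def get_names(self) -> list[str]: ...


class SQLNameLoader:
    def __init__(self, db_path: str):
        self.db_path = db_path

    def get_names(self) -> list[str]:
        conn = sqlite3.connect(self.db_path)
        try:
            return [row[0] for row in conn.execute("SELECT name FROM people")]
        finally:
            conn.close()


class CSVNameLoader:
    """Hypothetical alternative source: one name per row, first column."""

    def __init__(self, csv_path: str):
        self.csv_path = csv_path

    def get_names(self) -> list[str]:
        with open(self.csv_path, newline="") as f:
            return [row[0] for row in csv.reader(f) if row]


class Process:
    def __init__(self, name_loader: NameLoader):
        self.name_loader = name_loader  # chosen by the caller, not hardcoded here

    def run(self) -> list[str]:
        names = self.name_loader.get_names()
        return sorted(name.strip().title() for name in names)


def main(source: str, path: str) -> list[str]:
    # The only place that decides where the data comes from.
    loader = SQLNameLoader(path) if source == "sql" else CSVNameLoader(path)
    return Process(loader).run()
```

`Process.run()` never changes when a new source is added; only `main` learns about the new loader.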

This means that the writing of the code is decoupled from the usage of the code. If you suddenly want to use the processing pipeline for a different purpose, you can swap the variable to a different implementation, but the pipeline shouldn’t change.

This is again an example of the Open-Closed Principle. The pipeline is open for extension (you can add new data sources) but closed for modification (you can do this without editing the actual `run()` function).

The paradigm for this is “Complex behaviour is built, not written”, meaning that complicated functionality is composed of smaller parts, as and when needed, rather than written into one file.

Repeat this for all external connections as needed. This is also a very general principle that you can apply any time you need optionality. As with all tech debt, you need to use economics to figure out whether something is worth decoupling (see also YAGNI, “You ain’t gonna need it”), but with the refactoring machine you have built, this won’t be a problem.

Fifth Step: Write an actual test

So far, all these tests actually called the external systems. This is less than ideal, as your frequent test runs can disturb production systems. Also, if your original notebook was running production-grade calculations, each run can potentially take a long time.

The goal of refactoring is to move the code around without changing its behaviour and do it in the most convenient (fastest) manner. You will employ the same trick as you did with the assertions: save the input and load that instead of connecting to the real system. As your code is decoupled from input sources, this won’t be a problem.

Create a new Python file and Typer script with:
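A minimal sketch of a caching `TestNameLoader`, assuming it wraps a real loader such as `SQLNameLoader`. The `cache_file` parameter is hypothetical, and `sample_count` plays the role of the `sampleCount` variable described below:

```python
import os
import pickle
from typing import Optional


class TestNameLoader:
    """Calls the real loader once, caches the result, then reads the cache."""

    def __init__(self, real_loader, cache_file: str, sample_count: Optional[int] = None):
        self.real_loader = real_loader
        self.cache_file = cache_file
        self.sample_count = sample_count  # None means "use everything"

    def get_names(self) -> list:
        if os.path.exists(self.cache_file):
            with open(self.cache_file, "rb") as f:
                names = pickle.load(f)
        else:
            # First run: hit the real system once and save what it returned.
            names = self.real_loader.get_names()
            with open(self.cache_file, "wb") as f:
                pickle.dump(names, f)
        # Slicing with None returns the full list.
        return names[: self.sample_count]
```

In `test_main`, the pipeline would then be built as `Process(TestNameLoader(SQLNameLoader(db_path), "names.pkl", sample_count))`, leaving `run()` untouched.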

So now, if you run `test_main`, it will save the input data the first time and use the saved file from then on. Running the pipeline may still take a long time, and just because you rename a variable (typical in refactoring), you don’t want to rerun the entire calculation. This is what the `sampleCount` variable is for. But here is a problem: the assertions are part of running the pipeline. This coupling needs to be sorted out before you can speed up testing with `sampleCount`.

There are two solutions. The first: return the asserted variables from `run()`, move the assertion code from `run()` to `test_main()`, and handle `sampleCount` during the assertions in `test_main`.

Alternatively (and this depends on how you want to use `Process` in the future), you can decouple outputting the results with an “OutputSaver” class and write a “TestOutputSaver” that runs the assertions instead of saving the results. Make sure `TestOutputSaver` respects the `sampleCount` variable.
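A sketch of the second option, assuming both savers share a single `save(result)` method (a hypothetical interface). To keep it short, `Process` takes its input as a plain list here instead of through a loader, and `OutputSaver` simply pickles the result:

```python
import os
import pickle
from typing import Optional


class OutputSaver:
    """Production: write the results somewhere (here, a pickle file)."""

    def __init__(self, output_file: str):
        self.output_file = output_file

    def save(self, result: list) -> None:
        with open(self.output_file, "wb") as f:
            pickle.dump(result, f)


class TestOutputSaver:
    """Testing: check the results against a saved reference instead of saving."""

    def __init__(self, expected_file: str, sample_count: Optional[int] = None):
        self.expected_file = expected_file
        self.sample_count = sample_count

    def save(self, result: list) -> None:
        with open(self.expected_file, "rb") as f:
            expected = pickle.load(f)
        # Only the first sample_count reference rows are compared.
        assert result == expected[: self.sample_count]


class Process:
    def __init__(self, names: list, output_saver):
        self.names = names
        self.output_saver = output_saver  # production or test behaviour, chosen by the caller

    def run(self) -> None:
        result = [name.strip().title() for name in self.names]
        self.output_saver.save(result)
```

Here `run()` has no idea whether it is being tested; the choice of saver is made by whoever builds the `Process`.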

After you move the assertions out of `run()`, the `main` script is free of the testing specification, and it becomes the production version of the code. You can iterate on it and still verify that it will run correctly when you move it to production, while the testing code stays outside it. And this is the purpose of the whole effort.

Next Steps

The code is now in a refactorable state. You can safely make changes, run the tests, and either commit your changes or revert to a state that passes the tests. You don’t need to worry about changing your code anymore.

This article only skimmed what refactoring is about, and even so, I had to take shortcuts, or this post would be 10,000 words long. None of the changes above addressed the quality of the original code; they just dealt with some coupling issues. There are plenty of further techniques to make your code more readable, flexible and robust. In the next part, I will write about code smells, how to identify them and what you can do to avoid them. I will also write about the relevant parts of design patterns.

Please subscribe to be notified about them. Also, if you give it a try and have more questions, don’t hesitate to write them in the comment section.

Thanks for mentioning Typer! I came across it years ago when I was first starting out with FastAPI but never thought about it much. I should have given it more attention given the quality of what tiangolo does, but this was a welcome reminder to reacquaint myself with it.

Hello, Laszlo. Thank you for the material, I have a couple of questions.

1. What exactly do you assert, comparing "y" with the optimal model results pkl file? Model can produce metrics, feature importances, predicted values, etc. What should I consider?

2. In your assertion code "y" stands for predicted labels, I assume. So it returns false if one of predicted labels is not equal to the one from the ideal-model (pkl file). But how can it help in testing? I won't even know which value is wrong and, especially, why is it so.

3. You have get_names methods in both your TestNameLoader and SQLNameLoader classes. I am pretty sure this breaks one of the OOP principles.

4. Same for the Process's run() and the Typer's run() methods.

5. I don't see the purpose of the TestNameLoader class. It only saves the dataset into a pickle file and returns 0-sampleCount rows from it. Shouldn't you name it "Dataset_file_loader" instead?

Maybe I have misunderstood something.