Need for Speed: Why High Quality Code Matters in Data Science

2021-06-14

The need for high-quality code in productionised machine learning systems is frequently mentioned online, but rarely with practical takeaways or justification.

Software engineering terminology such as SOLID, Design Patterns or TDD is thrown around without explaining how it applies to data-intensive workflows. After a popular conversation and a meeting of minds at the great MLOps.community, an entire channel was dedicated to the topic to clear this up. The participants’ goal is to develop material that Data Scientists can use to improve their coding skills and their efficiency in delivering and maintaining solutions.

This is the first blog post on the topic; it defines the context and justifies the practice in machine learning.

Once upon a time, there was the analyst…

Most Data Science teams trace their origins to big data analytics and statistics.

They were reinforced by freshly minted graduates from academia. Unfortunately, both groups’ workflows are tuned for one-off delivery of results: analysts create reports, and academics write journal papers.

Neither group needs to prepare for long-term maintenance and iteration, which is a must in modern product management.

Productionised machine learning is the continuation of software engineering by other means

All of this changed when data-intensive cloud applications and cheap computing power enabled productionised machine learning.

Products and features are no longer defined by a sequence of commands (the “imperative” paradigm) but solely through input-output data. Hence, we can call these “data defined products”. This is a beneficial way of solving problems because the problem definition (the dataset) is separate from the implementation (the model). This is known as the “declarative” paradigm.

This new way of doing machine learning requires new ways of solving problems.

Key aspects of software development

Traditional waterfall software development is outdated in the current fast-changing and always-online environment.

There is no time to collect specifications, write code and ship on a yearly release cycle. Instead, modern software engineering employs continuous integration and delivery (CI/CD) practices to push new features to users and evaluate the value they add through A/B tests on KPIs. All of this happens while maintaining 24/7 uptime and fixing bugs on the fly.

Agile software development summarised this in the following principles:

Unplanned changes are inevitable

Code is read 10x more often than it is written, as engineers make changes

Continuous delivery and always-in-production is a must

An efficient way to test that a change doesn’t violate any previous assumptions is a must

Features of software development

Software engineers have spent the last 20 years developing frameworks to solve the problems above.

The resulting solutions have many names: test-driven development, extreme programming, agile, lean, continuous integration and delivery, but they essentially all address the above principles. So instead of worrying about names, let’s list the primary activities that software engineers practice to overcome difficulties.

We will see in the next section how these need to be modified for productionised machine learning.

To simplify changes: implement testing

Testing allows programmers to quickly verify that their most recent change didn’t break any previous assumptions about the solution. They hope that the test environment is as close to production as possible, so the change won’t break there either. When they write a new feature, they include a test for it as well, so that future programmers can verify that their changes don’t break this feature either. And the cycle continues indefinitely.
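As a minimal sketch of this cycle in Python, assume a hypothetical feature function `days_since_last_purchase` (not taken from any real codebase). Each behaviour gets a small `test_*` function with plain `assert` statements, the convention pytest collects automatically, so the tests can be re-run after every change.

```python
from datetime import date
from typing import List, Optional


def days_since_last_purchase(purchase_dates: List[date], today: date) -> Optional[int]:
    """Hypothetical feature: days since the most recent purchase, or None if there is none."""
    if not purchase_dates:
        return None
    return (today - max(purchase_dates)).days


def test_counts_days_since_most_recent_purchase():
    # Written together with the feature itself.
    dates = [date(2021, 6, 1), date(2021, 6, 10)]
    assert days_since_last_purchase(dates, today=date(2021, 6, 14)) == 4


def test_no_purchases_gives_none():
    # Pins down an assumption that future changes must not break.
    assert days_since_last_purchase([], today=date(2021, 6, 14)) is None
```

Running `pytest` on the file executes both tests; any later change that breaks either assumption fails immediately instead of surfacing in production.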

To enable testability: employ decoupling

Decoupling allows the same code that will eventually run in production to be set up in a test environment and tested without modification. This keeps the test and production environments aligned without requiring untestable dependencies (like writing into a live database). Decoupling also simplifies changes: decoupled components rely less on each other and can therefore be changed independently.
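One common way to achieve this decoupling is to pass dependencies in rather than hard-coding them. The sketch below assumes a hypothetical `PredictionSink` interface: production code would plug in a database-backed implementation (not shown), while the test plugs in an in-memory one, and the scoring function runs unchanged in both.

```python
from typing import Dict, List, Protocol, Tuple

Row = Tuple[str, float]


class PredictionSink(Protocol):
    """Anything that can store predictions: a live database in production, a list in tests."""

    def write(self, rows: List[Row]) -> None:
        ...


class InMemorySink:
    """Test double that keeps rows in memory instead of touching a live database."""

    def __init__(self) -> None:
        self.rows: List[Row] = []

    def write(self, rows: List[Row]) -> None:
        self.rows.extend(rows)


def score_customers(features: Dict[str, float], sink: PredictionSink) -> None:
    """Hypothetical pipeline step; it does not know or care where the scores end up."""
    scores = [(customer_id, min(1.0, value / 100)) for customer_id, value in features.items()]
    sink.write(scores)


# In a test, the in-memory sink replaces the database.
sink = InMemorySink()
score_customers({"a": 50.0, "b": 250.0}, sink)
print(sink.rows)  # [('a', 0.5), ('b', 1.0)]
```

Because `score_customers` only depends on the `write` method, swapping the sink requires no change to the code under test.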

To maintain quality: use refactoring

Code runs 24/7, and fixes are done on the fly.

There is no “version 2” in which the entire codebase is thrown away and rewritten from scratch. The same code is read all the time, again and again; anecdotal estimates say that code is read 10x more often than it is written. Investing in readability is, therefore, a high-value activity. Because the code is easy to test, changes are relatively easy to make through a set of simple steps and guidelines: identify code smells, apply a set of code changes and run the tests. Repeat this until the code is deemed easily readable. Decoupling also helps readability by establishing “layers of abstraction” and decomposing the problem into independent parts.
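To illustrate one refactoring step, here is a hypothetical pricing rule before and after removing two common smells (magic numbers and duplicated nested branches). The characterisation test, run after each step, checks that behaviour is unchanged; the function names and constants are made up for the example.

```python
# Before: magic numbers and duplicated nested branches.
def price_v1(amount, is_member):
    if is_member:
        if amount > 100:
            return amount * 0.9 - 5
        else:
            return amount * 0.9
    else:
        if amount > 100:
            return amount - 5
        return amount


# After: same behaviour, with named constants and each rule applied once.
MEMBER_DISCOUNT = 0.9
BULK_THRESHOLD = 100
BULK_REBATE = 5


def price_v2(amount: float, is_member: bool) -> float:
    price = amount * MEMBER_DISCOUNT if is_member else amount
    if amount > BULK_THRESHOLD:
        price -= BULK_REBATE
    return price


def test_refactoring_preserves_behaviour():
    # Characterisation test: compare old and new versions on representative inputs,
    # including values on both sides of the threshold.
    for amount in [0, 50, 100, 100.01, 250]:
        for is_member in (True, False):
            assert price_v1(amount, is_member) == price_v2(amount, is_member)
```

Once the test passes, `price_v1` can be deleted and the test rewritten against the new version alone.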

How machine learning is different from software development

The primary difference stems from the declarative nature of machine learning.

Data defined products are difficult to test. Instead of a series of checkpoints where you can test behaviour (the imperative paradigm), all you have is input-output data and a single implementation of the mapping between them.

There must be a workaround to achieve fast testability.

Some parts of the code are still imperative. For example, simple algorithms and deterministic feature calculations can be unit tested. If you successfully employ decoupling, you can create a test environment that plugs smaller datasets into your data pipeline and checks whether it runs or fails (ignore the quantitative results for now). Running the same pipeline on larger datasets will deliver quantitative results. Eventually, you test your pipeline in production, first in shadow mode and then in an A/B test, to make sure it doesn’t harm an actual KPI.
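A smoke test of this kind might look like the following sketch. The pipeline, its features and the “model” (which simply predicts the mean conversion rate) are hypothetical stand-ins; the point is that the test calls the same `run_pipeline` entry point as production, feeds it a tiny synthetic dataset, and only checks that it runs and returns well-formed output.

```python
import random
from typing import Dict, List, Tuple

Row = Dict[str, int]


def build_features(rows: List[Row]) -> List[Tuple[int, float]]:
    """Hypothetical deterministic features: visit count and per-visit conversion rate."""
    return [(row["visits"], row["purchases"] / max(row["visits"], 1)) for row in rows]


def fit_mean_rate(features: List[Tuple[int, float]]) -> float:
    """Stand-in 'model' that learns only the average conversion rate."""
    rates = [rate for _, rate in features]
    return sum(rates) / len(rates)


def run_pipeline(rows: List[Row]) -> List[float]:
    """Entry point shared by tests and production; only the input size differs."""
    features = build_features(rows)
    mean_rate = fit_mean_rate(features)
    return [mean_rate for _ in features]


def make_tiny_dataset(n: int = 20, seed: int = 0) -> List[Row]:
    """Small synthetic input so the smoke test runs in milliseconds."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        visits = rng.randint(0, 10)
        rows.append({"visits": visits, "purchases": rng.randint(0, visits)})
    return rows


def test_pipeline_smoke():
    # Only checks that the pipeline runs end to end and returns well-formed output;
    # predictive quality is deliberately ignored at this stage.
    predictions = run_pipeline(make_tiny_dataset())
    assert len(predictions) == 20
    assert all(0.0 <= p <= 1.0 for p in predictions)
```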

All you are doing is running tests that are increasingly similar to the production environment.
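The last rung of that ladder, shadow mode, can be sketched as a wrapper that serves the live model’s prediction while recording the candidate’s prediction for later comparison. This assumes models are plain Python callables and uses an in-memory list as the log; a real deployment would write to a proper store and likely run the candidate asynchronously.

```python
from typing import Callable, List, Tuple

Model = Callable[[float], float]
ShadowLog = List[Tuple[float, float, float]]


def serve_with_shadow(x: float, live: Model, candidate: Model, log: ShadowLog) -> float:
    """Serve the live model's prediction; record the candidate's for offline comparison."""
    live_pred = live(x)
    try:
        shadow_pred = candidate(x)
    except Exception:
        # A failing candidate must never change what users see.
        shadow_pred = float("nan")
    log.append((x, live_pred, shadow_pred))
    return live_pred


def live_model(x: float) -> float:
    return 0.5 * x  # hypothetical current production model


def candidate_model(x: float) -> float:
    return 0.75 * x  # hypothetical new model under evaluation


log: ShadowLog = []
print(serve_with_shadow(10.0, live_model, candidate_model, log))  # 5.0
print(log)  # [(10.0, 5.0, 7.5)]
```

Users only ever see `live_pred`, so the candidate can be evaluated on real traffic without risking a KPI.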

Suppose you rename a variable or refactor a for loop. In these cases, you probably don’t expect a production KPI to change radically, so a simpler and faster test will suffice. Once you implement a more extensive feature, you can run the more elaborate tests on the same codebase.
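A minimal way to organise tests by cost is to tag each one with a tier and run only the tiers a change calls for. The registry below is a hypothetical, dependency-free sketch with an illustrative three-tier convention; pytest supports the same idea through markers (for example `@pytest.mark.slow` together with `pytest -m "not slow"`).

```python
from typing import Callable, Dict, List

# Tier 1: seconds (unit tests); tier 2: minutes (pipeline on a small sample);
# tier 3: hours (full dataset, quantitative metrics). An illustrative convention.
TESTS: Dict[int, List[Callable[[], None]]] = {1: [], 2: [], 3: []}


def tier(level: int):
    """Decorator registering a test under a cost tier."""
    def register(fn: Callable[[], None]) -> Callable[[], None]:
        TESTS[level].append(fn)
        return fn
    return register


def run_up_to(max_tier: int) -> List[str]:
    """Run every test whose tier is <= max_tier and return the names that ran."""
    ran = []
    for level in sorted(TESTS):
        if level > max_tier:
            break
        for test in TESTS[level]:
            test()
            ran.append(test.__name__)
    return ran


@tier(1)
def test_feature_calculation():
    assert 3 / 4 == 0.75  # e.g. a deterministic feature such as a conversion rate


@tier(2)
def test_pipeline_runs_on_sample():
    pass  # placeholder: e.g. a smoke test on a tiny dataset


@tier(3)
def test_full_data_metric_does_not_regress():
    pass  # placeholder: e.g. compare an offline metric against a stored baseline


# Renaming a variable: tier 1 is enough. Shipping a larger feature: run everything.
print(run_up_to(1))  # ['test_feature_calculation']
```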

It is essential that the codebase you are refactoring is the same one that runs in production; otherwise, the tests are not relevant to the production version.

How do you actually add value through machine learning in practice?

First, there is a business problem and an idea of how to solve it.

The idea is motivated by the problem, which traditional software engineering has found unsolvable. The primary justification for data defined products is that data is available and imperative solution attempts have failed or been deemed too cumbersome.

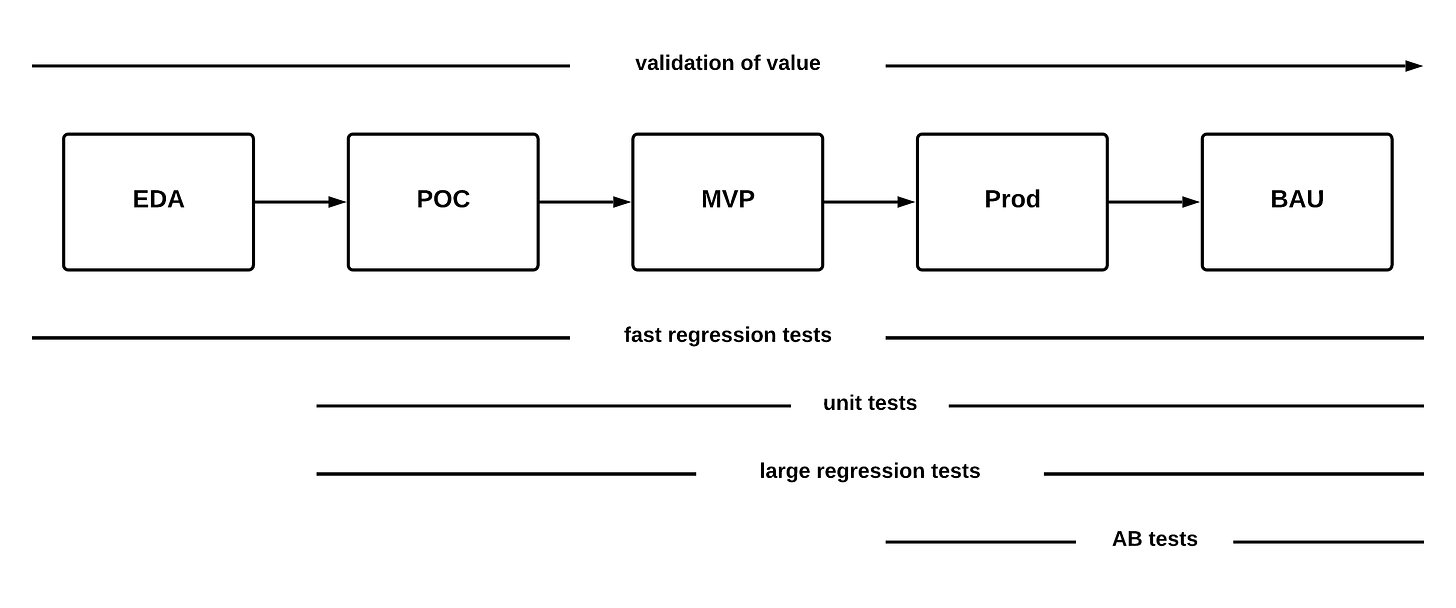

So Data Scientists (DSes) start with an exploratory data analysis (EDA) phase that hopefully turns into a proof-of-concept (POC) phase. During these periods, DSes start making assumptions about the data and the solution and write some kind of pipeline. If they stick to code quality principles, they can efficiently iterate and improve their results while preparing for productionisation. Most of the time, the POC cannot prove value on its own; it only shows that there is an opportunity for value. You can only prove value in production by observing changes in the KPI, often through A/B tests to be sure.
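For a binary KPI such as conversion, one standard way to read an A/B test is a two-proportion z-test. The sketch below assumes large, independent samples per variant and at least one conversion and one non-conversion overall (otherwise the standard error is zero); the counts in the usage line are invented for illustration, not results from any real experiment.

```python
from math import erf, sqrt
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variants A and B share one conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(z) = (1 + erf(z / sqrt(2))) / 2.
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)


# Illustrative counts only: 200/10,000 conversions in control vs 250/10,000 in treatment.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the KPI difference is unlikely under the no-difference hypothesis; it says nothing about whether the difference is large enough to matter for the business.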

If the POC results look promising, the project moves to the minimum viable product (MVP) phase. The MVP is at least partially in production, and in any case as close to it as possible. The DS team must keep iterating while carrying forward all the assumptions, code and effort put into the project through the EDA and POC phases. They also need to make sure that additional, production-specific assumptions are fulfilled. The best way to do this is through continuous testing and re-evaluation.
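Production-specific assumptions are easier to keep honest when they are written down as executable checks rather than remembered. The sketch below turns a few hypothetical assumptions about the input (field names and value ranges invented for this example) into a function that returns a list of violations, so the same check can run in tests and as a guard in the production pipeline.

```python
from typing import Any, Dict, List

# Hypothetical assumptions collected during EDA/POC, written down as executable checks.
REQUIRED_FIELDS = {"customer_id", "visits", "purchases"}


def check_input_assumptions(rows: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable violations; an empty list means every assumption holds."""
    problems = []
    if not rows:
        problems.append("input is empty")
    for i, row in enumerate(rows):
        missing = REQUIRED_FIELDS - set(row)
        if missing:
            problems.append(f"row {i}: missing {sorted(missing)}")
            continue  # the remaining checks need every field
        if row["visits"] < 0:
            problems.append(f"row {i}: negative visits")
        if row["purchases"] > row["visits"]:
            problems.append(f"row {i}: more purchases than visits")
    return problems
```

Returning all violations at once, instead of failing on the first, makes a broken production batch much quicker to diagnose.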

The MVP is validated through a series of A/B tests. In production, critical problems that can affect the feasibility of the project are often identified. These need to be fixed quickly, and all previous assumptions rechecked. You don’t want to do this from scratch every time. New, previously unseen opportunities can also emerge and be incorporated into the solution. MVPs are often rushed forward even on speculative POC results precisely because these unforeseen complications are anticipated. If you can only get a complete picture once your solution is in production, your goal should be to reach the MVP phase as quickly and cheaply as possible and get validation there. You can still shut down the project, and thanks to the efficient delivery pipeline, you won’t have wasted much on each MVP iteration.
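Rechecking assumptions across a series of A/B tests is much easier when each user lands in the same variant every time. A common technique, sketched here under the assumption that users have stable string IDs, is to hash the user ID together with an experiment name; the names `assign_variant` and `exp-1` are illustrative.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'control' or 'treatment' for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Take 53 bits so the bucket is an exactly representable float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return "treatment" if bucket < treatment_share else "control"


# The same user always gets the same answer, so reruns after a fix compare like with like.
print(assign_variant("user-42", "exp-1") == assign_variant("user-42", "exp-1"))  # True
```

Including the experiment name in the hash gives each experiment its own independent split, so one test’s treatment group is not systematically reused in the next.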

Once the MVP is approved and the A/B tests have passed, it goes into full production. Hopefully, nothing radical happens here, but edge cases can still cause problems now that the solution is exposed to every use case. Because the solution serves the entire user base, these problems need to be fixed quickly and efficiently, with each change pushed through the whole pipeline to recheck every other assumption. Not an easy feat without a high-quality codebase.

Still, productionised solutions are not static: the world changes, and the environment is dynamic. In this business-as-usual (BAU) phase, DSes monitor their systems and evaluate whether their test environment is still representative of the production one. They also receive feature and improvement requests, which they implement the same way as before: by pushing them through the entire testing cycle.
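One way to check whether the test data is still representative is to compare feature distributions with a drift statistic. The sketch below computes the Population Stability Index (PSI) over fixed bins using only the standard library; the bin edges are an assumption you would choose per feature, the sample values are invented, and the often-quoted thresholds (below about 0.1 stable, above about 0.25 a significant shift) are rules of thumb rather than guarantees.

```python
from math import log
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float], edges: List[float]) -> float:
    """PSI between a non-empty reference sample (e.g. test data) and a production sample.

    `edges` are sorted bin boundaries; values outside them fall into the first/last bin.
    """
    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # bin index = edges at or below v
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e_shares, a_shares = shares(expected), shares(actual)
    return sum((a - e) * log(a / e) for e, a in zip(e_shares, a_shares))


reference = [0.1, 0.2, 0.3, 0.6, 0.7, 0.8]      # e.g. a feature in the test dataset
production = [0.6, 0.7, 0.8, 0.9, 0.95, 0.99]   # the same feature observed in production
print(population_stability_index(reference, production, edges=[0.5]))
```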

Conclusion

As you can see from the above, in practice the codebase should reach production very fast, because that’s where real validation happens. That’s also where real value is added, through frequent updates made on the same codebase. If you get stuck in the POC phase, you are left guessing the size of the opportunity. If you rush into production with a low-quality codebase, you will be unable to respond to unforeseen challenges.

Next Steps

This post showed how productionised machine learning differs from data science analysis and resembles software engineering. Taking techniques from software engineering and adapting them to data defined products enables you to deliver value efficiently and to justify investing in a high-quality codebase. In the following posts, I will write more about the tactical (code writing) and strategic (solution design) aspects of the paradigm. Please share and subscribe to be notified.