A simple trick to optimise code and maintain readability in a compute-heavy application

2022-01-22

Join our Discord community, “Code Quality for Data Science (CQ4DS)”, to learn more about the topic: https://discord.gg/8uUZNMCad2. All DSes are welcome regardless of skill level!

“Premature optimisation is the root of all evil.”

Apparently, this famous quote is by Sir Tony Hoare (and not by Donald Knuth, who nevertheless popularised it).

The general advice on performance optimisation is to put it off until you have no other choice. But what happens when you work on compute-heavy research problems where solutions might not be feasible without early tweaks and hacks?

Performance vs Readability

You face a twin challenge in research applications: high performance is critical from early on, yet you need to be ready to change your code whenever your research direction changes.

The problem is that performance hacks usually ruin readability and maintainability, which are both critical for agility (the capability to change). On the other hand, codecraft doesn’t concern itself with performance.

There are many techniques like decoupling, single responsibility and others that can help you even in a research context. Here, I will focus on a single one that is particularly useful in relation to algorithms.

The Strategy Pattern

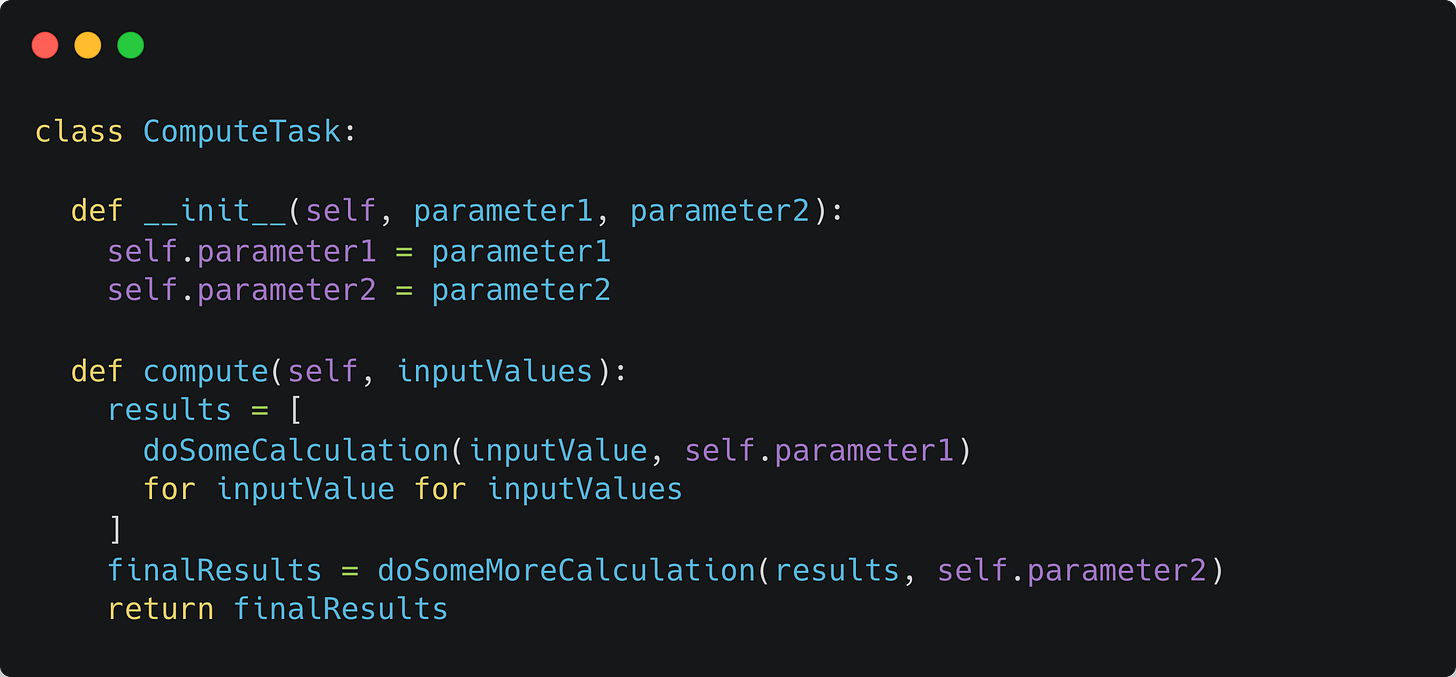

According to Wikipedia: “the Strategy Pattern is a behavioural software design pattern that enables selecting an algorithm at runtime.” What does this mean in practice, and how will it help us? Let’s take a look at this code:
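A minimal sketch of such a task is shown here. Only the names ComputeTask, parameter1 and parameter2 come from the text; the `run` method and the arithmetic are placeholders assumed for illustration, not the original listing.

```python
class ComputeTask:
    """Hypothetical compute task used throughout this post's sketches."""

    def __init__(self, parameter1, parameter2):
        self.parameter1 = parameter1
        self.parameter2 = parameter2

    def run(self, inputs):
        # Critical section we would like to optimise (depends on parameter1).
        intermediate = []
        for x in inputs:
            intermediate.append(x * self.parameter1)

        # Rest of the calculation, downstream of the critical section
        # (depends on parameter2).
        return sum(intermediate) + self.parameter2
```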

It looks pretty straightforward: we have a function that calculates some results from a set of inputs and some additional parameters. The part we want to optimise depends on one parameter (parameter1), and the rest of the calculation uses another parameter (parameter2) downstream from the optimised part.

For this post, I will pretend that swapping that loop for a list comprehension is the only performance hack needed.

First try: Swap out the critical section

“Let’s not complicate things,” said the researcher, and quickly overwrote the original implementation:
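As a rough sketch (the `run` method and its arithmetic are assumed placeholders, not the original listing), the overwritten version might look like this, with the loop replaced in place and the old implementation gone:

```python
class ComputeTask:
    """Hypothetical task after the loop was overwritten in place."""

    def __init__(self, parameter1, parameter2):
        self.parameter1 = parameter1
        self.parameter2 = parameter2

    def run(self, inputs):
        # The loop has been replaced by a list comprehension;
        # the original implementation no longer exists anywhere.
        intermediate = [x * self.parameter1 for x in inputs]

        # Downstream calculation is unchanged.
        return sum(intermediate) + self.parameter2
```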

This immediately raises the question: “How do you know it still does the same thing as before?” Of course, if all you do is swap a loop for a list comprehension, this is not a big deal, but with anything more complicated you will be in constant worry.

Second try: Switch between the two versions

Let’s put back the original version and switch between them with a boolean variable. Write this “debug” switch into every script and every place where ComputeTask is instantiated. Run the task in both “modes” and compare the results:
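One way this could look, as a sketch: the flag name `debug` follows the text, while the `run` method and the arithmetic are assumptions made for illustration.

```python
class ComputeTask:
    """Hypothetical task that keeps both versions behind a boolean switch."""

    def __init__(self, parameter1, parameter2, debug=False):
        self.parameter1 = parameter1
        self.parameter2 = parameter2
        self.debug = debug

    def run(self, inputs):
        if self.debug:
            # Original, slow but trusted implementation.
            intermediate = []
            for x in inputs:
                intermediate.append(x * self.parameter1)
        else:
            # Optimised implementation.
            intermediate = [x * self.parameter1 for x in inputs]

        return sum(intermediate) + self.parameter2


if __name__ == "__main__":
    inputs = [1, 2, 3]
    # Run the task in both modes and compare the results by hand.
    slow = ComputeTask(2, 1, debug=True).run(inputs)
    fast = ComputeTask(2, 1, debug=False).run(inputs)
    print(slow == fast)
```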

First complication: Branch dependent parameters

Let’s say that we found that to fine-tune the efficient branch, we need to introduce a parameter that is specific to that branch:
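To make this concrete, the sketch below invents a branch-specific parameter called `chunk_size` (a hypothetical name and behaviour, chosen only for illustration) that is meaningful to the efficient branch alone:

```python
class ComputeTask:
    """Hypothetical task where one branch needs its own parameter."""

    def __init__(self, parameter1, parameter2, chunk_size=2, debug=False):
        self.parameter1 = parameter1
        self.parameter2 = parameter2
        self.chunk_size = chunk_size  # only used by the efficient branch
        self.debug = debug

    def run(self, inputs):
        if self.debug:
            # Inefficient reference branch: ignores chunk_size entirely.
            intermediate = []
            for x in inputs:
                intermediate.append(x * self.parameter1)
        else:
            # Efficient branch: processes the inputs chunk by chunk.
            intermediate = []
            for start in range(0, len(inputs), self.chunk_size):
                chunk = inputs[start:start + self.chunk_size]
                intermediate.extend([x * self.parameter1 for x in chunk])

        return sum(intermediate) + self.parameter2
```

Note how the constructor now carries a parameter that one of the two branches never reads.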

Now, this starts to get messy. We are dragging the inefficient solution along just for comparison, while the parameters of the efficient solution begin to mix into the code. If you have more than one branch, you can easily get confused.

Enter the Strategy Pattern

On the surface, this is a remarkably simple idea: wrap the critical part into a class. But why would that help, you may ask? Let me show you (I added a __main__ section to show how the task is declared):
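A sketch of this intermediate step, under the assumption that the two variants are called SlowAlgorithm and FastAlgorithm and that the efficient one takes a `chunk_size` parameter (all three names are hypothetical). The task still decides internally which class to build:

```python
class SlowAlgorithm:
    """Original loop-based version of the critical section."""

    def __init__(self, parameter1):
        self.parameter1 = parameter1

    def run(self, inputs):
        result = []
        for x in inputs:
            result.append(x * self.parameter1)
        return result


class FastAlgorithm:
    """Optimised version with its own, branch-specific parameter."""

    def __init__(self, parameter1, chunk_size):
        self.parameter1 = parameter1
        self.chunk_size = chunk_size

    def run(self, inputs):
        result = []
        for start in range(0, len(inputs), self.chunk_size):
            chunk = inputs[start:start + self.chunk_size]
            result.extend([x * self.parameter1 for x in chunk])
        return result


class ComputeTask:
    def __init__(self, parameter1, parameter2, chunk_size, debug=False):
        self.parameter2 = parameter2
        # The task still creates the algorithm itself, so the switch
        # and both parameter sets remain part of its constructor.
        if debug:
            self.algorithm = SlowAlgorithm(parameter1)
        else:
            self.algorithm = FastAlgorithm(parameter1, chunk_size)

    def run(self, inputs):
        intermediate = self.algorithm.run(inputs)
        return sum(intermediate) + self.parameter2


if __name__ == "__main__":
    task = ComputeTask(parameter1=2, parameter2=1, chunk_size=2, debug=False)
    print(task.run([1, 2, 3]))
```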

This looks much longer, but the two solutions are now separated. However, they are still mixed together inside the ComputeTask object. So let’s do another round of refactoring, championing the “Complex behaviour is composed” paradigm. Instead of creating the algorithm objects inside ComputeTask, create them outside and pass them in at construction time.
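The refactored version could be sketched as follows. SlowAlgorithm, FastAlgorithm and `chunk_size` are hypothetical names carried over for illustration; the point is that ComputeTask receives a ready-made algorithm object and only calls its `run` method.

```python
class SlowAlgorithm:
    def __init__(self, parameter1):
        self.parameter1 = parameter1

    def run(self, inputs):
        result = []
        for x in inputs:
            result.append(x * self.parameter1)
        return result


class FastAlgorithm:
    def __init__(self, parameter1, chunk_size):
        self.parameter1 = parameter1
        self.chunk_size = chunk_size

    def run(self, inputs):
        result = []
        for start in range(0, len(inputs), self.chunk_size):
            chunk = inputs[start:start + self.chunk_size]
            result.extend([x * self.parameter1 for x in chunk])
        return result


class ComputeTask:
    def __init__(self, algorithm, parameter2):
        # Any object with a run(inputs) method will do (duck typing).
        self.algorithm = algorithm
        self.parameter2 = parameter2

    def run(self, inputs):
        # No conditional: the task does not know which algorithm it holds.
        return sum(self.algorithm.run(inputs)) + self.parameter2


if __name__ == "__main__":
    # Behaviour is chosen where the task is declared.
    task = ComputeTask(FastAlgorithm(parameter1=2, chunk_size=2), parameter2=1)
    print(task.run([1, 2, 3]))
```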

What changed? Parameters are passed directly to the place where they will be used, and the conditional branch is gone. The actual behaviour of the task is set at declaration, but the task itself is not aware of which algorithm it will execute. But there is a problem: with the debug switch gone, there is no way to run a test.

Not to worry! With the implementation of the Strategy Pattern, you can create new behaviour and use it without changing anything else:
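A sketch of such a wrapper follows. TestAlgorithm is the name used in this post; SlowAlgorithm, FastAlgorithm, `chunk_size` and the constructor argument names `reference` and `candidate` are assumptions made for illustration.

```python
class SlowAlgorithm:
    def __init__(self, parameter1):
        self.parameter1 = parameter1

    def run(self, inputs):
        result = []
        for x in inputs:
            result.append(x * self.parameter1)
        return result


class FastAlgorithm:
    def __init__(self, parameter1, chunk_size):
        self.parameter1 = parameter1
        self.chunk_size = chunk_size

    def run(self, inputs):
        result = []
        for start in range(0, len(inputs), self.chunk_size):
            chunk = inputs[start:start + self.chunk_size]
            result.extend([x * self.parameter1 for x in chunk])
        return result


class TestAlgorithm:
    """Runs two algorithms on the same inputs and compares the results."""

    __test__ = False  # keeps pytest from collecting this class as a test case

    def __init__(self, reference, candidate):
        self.reference = reference
        self.candidate = candidate

    def run(self, inputs):
        expected = self.reference.run(inputs)
        actual = self.candidate.run(inputs)
        if actual != expected:
            raise AssertionError(f"results differ: {actual!r} != {expected!r}")
        return actual


class ComputeTask:
    def __init__(self, algorithm, parameter2):
        self.algorithm = algorithm
        self.parameter2 = parameter2

    def run(self, inputs):
        return sum(self.algorithm.run(inputs)) + self.parameter2


if __name__ == "__main__":
    # ComputeTask is unchanged; only the object passed in is different.
    checked = TestAlgorithm(SlowAlgorithm(2), FastAlgorithm(2, chunk_size=2))
    task = ComputeTask(checked, parameter2=1)
    print(task.run([1, 2, 3]))
```

Exact equality is used for the comparison here; for floating-point results a tolerance-based check would likely be more appropriate.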

The TestAlgorithm is just a wrapper that runs both algorithms and compares the results. You can seamlessly test any algorithm without the task being aware of the test, which is highly desirable. Now you can make tweaks and leave the rest of your codebase unchanged. You can also keep a continuous test setup outside your codebase and run it regularly, just in case.

And that’s it. Hopefully, you found it useful and will use it creatively to solve future optimisation problems or to structure code where optionality is needed.

Thanks for reading this post. I hope you found it helpful, and if you would like to read more, take a look at my series on how DSes can refactor their code for better productivity:


I like your examples using design patterns in general but I'm looking for your opinion on something.

Considering we are working with a dynamic language like Python, should we embrace duck typing or try to structure code/classes more akin to statically typed languages?

For instance, in your example I've noticed you don't define a common interface (defined as an abstract class in Python, as interface classes don't exist) that the concrete algorithms must implement.

I've noticed that Python examples on websites such as https://refactoring.guru/ generally include abstract interfaces and the like.

What is your take on this? Just keep it simple and take advantage of duck typing?

Thank you Laszlo!